mirror of

https://github.com/BookStackApp/BookStack.git

synced 2026-02-07 11:19:38 +03:00

Crawlers crawling pages with custom permissions #1703

Closed

opened 2026-02-05 01:39:54 +03:00 by OVERLORD

5 comments


Originally created by @userbradley on GitHub (May 3, 2020).

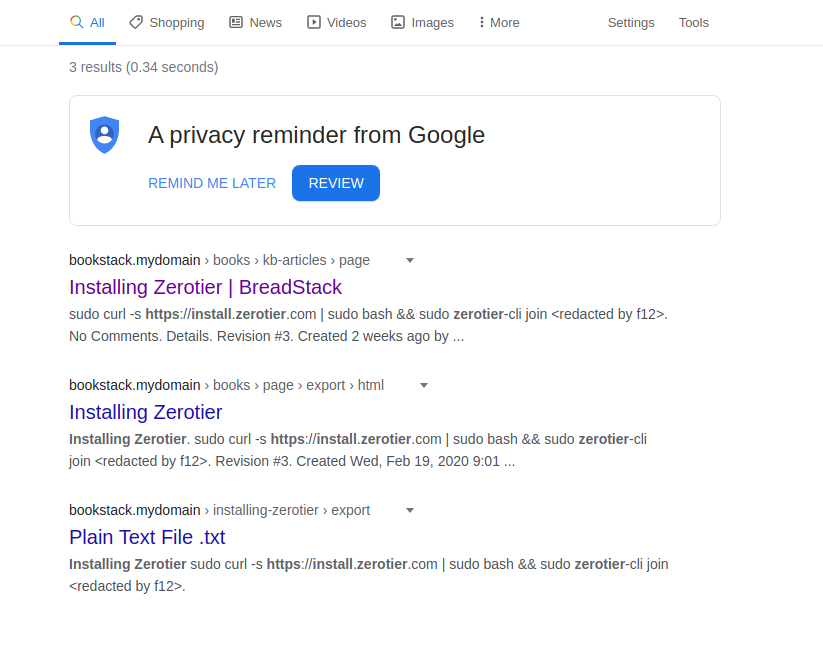

I have some pages on my BookStack instance that are not accessible to the public user or through public access, but Google has indexed these pages and exported them as text documents.

Steps To Reproduce

Steps to reproduce the behavior:

Expected behaviour

Page will not be crawled or show up in search engines

Actual outcome

Pages show up in Google.

Google exports the page as a PDF and a text file:

66.249.79.119 - - [02/May/2020:02:47:51 +0000] "GET /books/kb-articles/page/cachet/export/pdf HTTP/1.1" 200 702324 "-" "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2272.96 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

Screenshots

Google:

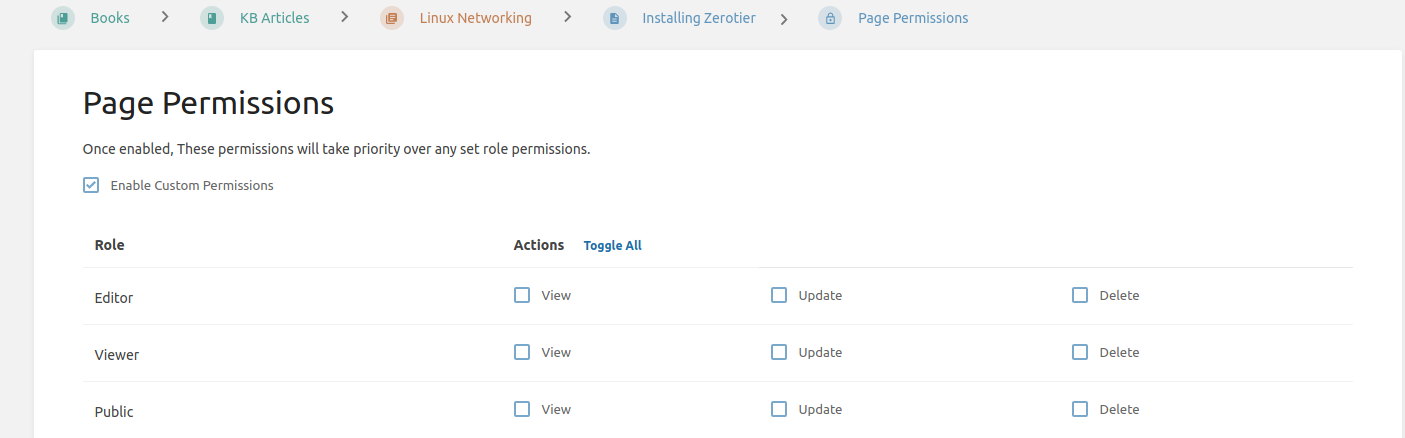

Permissions:

Your Configuration (please complete the following information):

PHP 7.4.5 (cli) (built: Apr 19 2020 07:36:30) ( NTS )

Additional context

N/A

@ssddanbrown commented on GitHub (May 3, 2020):

Hi @userbradley,

This is troubling. Just to confirm, has the page ever been public? Do you have a caching/CDN layer in front of BookStack, or some custom caching nginx rules set?

@ssddanbrown commented on GitHub (May 3, 2020):

Looking at the cached version of the page, it's as if page permissions were not active at the time of caching, since it does not show "Page Permissions Active" under details.

@userbradley commented on GitHub (May 3, 2020):

Hi @ssddanbrown, this page has never been public (that I know of). I have set permissions on the page to disallow anyone from viewing it publicly.

Try for yourself: https://bookstack.breadnet.co.uk/books/kb-articles/page/installing-zerotier

In terms of caching, no. It's a default nginx config.

Based on what you've said, and looking at the cached page, it seems like this was user error on my part: the cached copy is from the 5th of March, which I can only assume is when the page could have been made public.

The part that confuses me is that I've never allowed crawling of my site, so I'm going to need to look at Google Search Console to see what's up.

Thanks for your help

@ssddanbrown commented on GitHub (May 4, 2020):

Here's the available `.env` option to control this: d3ec38bee3/.env.example.complete (L262-L266)

If not set, or null, then BookStack will allow robots or not depending on whether the site is publicly viewable or not.

If you think BookStack is not following that logic then let me know and I can have a look into it.

It is possible to override the robots.txt file completely to set your own rules.
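
The fallback behaviour described above can be sketched roughly as follows. This is only an illustration in Python, not BookStack's actual code: the function names `robots_allowed` and `robots_txt` and their parameters are hypothetical, and the option is modelled as a three-state value (true, false, or unset/null).

```python
from typing import Optional


def robots_allowed(allow_robots: Optional[bool], site_is_public: bool) -> bool:
    """Decide whether crawlers should be allowed (hypothetical helper).

    An explicit true/false setting wins. When the setting is unset (None),
    the decision falls back to whether the site is publicly viewable.
    """
    if allow_robots is None:
        return site_is_public
    return allow_robots


def robots_txt(allowed: bool) -> str:
    """Render a minimal robots.txt body that applies to all user agents.

    An empty "Disallow:" permits everything; "Disallow: /" blocks everything.
    """
    rule = "Disallow:" if allowed else "Disallow: /"
    return f"User-agent: *\n{rule}\n"


if __name__ == "__main__":
    # Option unset on a non-public site: crawlers are asked to stay away.
    print(robots_txt(robots_allowed(None, site_is_public=False)))
```

Note that robots.txt is only a request to well-behaved crawlers. It does not enforce access control, so page permissions are still what keeps restricted content private.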

@userbradley commented on GitHub (May 9, 2020):

Thanks! It seems like it was purely down to user error :/

I will close this one! Ty